"Targeting" File Parsers with S2E and Kaitai Struct

2017-10-23

Introduction

Recently I’ve been playing around with file parsers in S2E. This typically

involves calling s2ecmd symbfile to make the parser’s input symbolic and then

running S2E to explore different paths through the parser. However, this is a

relatively heavy-handed approach; it makes the entire input file one big

symbolic blob, which quickly causes

path explosion.

Additionally, we may only be interested in exploring paths that exercise

specific functionality.

So how can we achieve more targeted symbolic execution on file-based programs

such as parsers? One approach could be to write a custom S2E plugin that

handles the onSymbolicVariableCreation event and intercepts

s2ecmd symbfile. You could then write C++ code that iterated over the

symbolic data and adjusted what was symbolic/concrete. The downsides of this

approach should be evident: it is time consuming and error prone to write C++

code to do this; it requires knowledge of the input file format; and you would

have to repeat this process for different file formats. Can we do better?

Kaitai Struct

Let’s briefly diverge from S2E and discuss Kaitai Struct.

Kaitai Struct is a tool for developing parsers for binary structures. It

provides a YAML-like language that allows for the concise definition of a

binary structure. The Kaitai Struct Compiler (ksc) then generates a parser

from this definition. This parser can be generated in a number of languages,

including C++, Python and Java.

Here is a partial definition of the ELF format in Kaitai Struct (taken from the

format gallery). It consists of a

number of “attributes” (e.g. magic, abi_version, etc.) which describe an

ELF file:

meta:

id: elf

title: Executable and Linkable Format

application: SVR4 ABI and up, many *nix systems

license: CC0-1.0

ks-version: 0.8

seq:

# e_ident[EI_MAG0]..e[EI_MAG3]

- id: magic

size: 4

contents: [0x7f, "ELF"]

# e_ident[EI_CLASS]

- id: bits

type: u1

enum: bits

# e_ident[EI_DATA]

- id: endian

type: u1

enum: endian

# e_ident[EI_VERSION]

- id: ei_version

type: u1

# e_ident[EI_OSABI]

- id: abi

type: u1

enum: os_abi

- id: abi_version

type: u1

- id: pad

size: 7

- id: header

type: endian_elf

I strongly recommend reading the Kaitai Struct documentation to get the most out of this post, as I will skip much of the detail here (mostly because I’m not really an expert myself!). Nevertheless, there is one feature that is worth singling out: the “processing spec”.

The processing spec allows you to apply a custom function that will “process” an attribute in some way. For example, an attribute may be encrypted/encoded. A processing spec can be applied to decrypt/decode this attribute at run time.

How is this relevant to symbolic execution? Suppose that we had an

s2e_make_symbolic processing spec, and by applying this spec to particular

attributes we would make only those parts of the input file symbolic. This

would give us more fine-grained control over S2E’s state space and potentially

reduce the path explosion problem. All we need is a way to combine S2E and

Kaitai Struct to make this possible!

Combining S2E and Kaitai Struct

We will use the Lua programming language to combine S2E

and Kaitai Struct. Using Lua allows us to reuse existing components — S2E

contains an embedded Lua interpreter (for parsing the S2E configuration file

and writing function/instruction

annotations),

while ksc is capable of generating Lua parsers. Thus, we can use ksc to

generate a Lua parser for our input file and embed this parser within the S2E

configuration file to make it accessible to S2E. (We could have used ksc to

generate a C++ parser, but this would require recompiling S2E every time we

wanted to use a different file format.) By selectively applying the

s2e_make_symbolic processing spec in our input definition, we can achieve

more targeted symbolic execution.

The remainder of this blog post will walk through how I combined S2E and Kaitai Struct. I’ll use the ELF definition (discussed previously) and readelf as a practical example.

In an effort to make it easier for others to play with the code, I’ve tried to

make it as stand-alone as possible — there are no modifications to S2E’s core

engine or ksc. However, this means that the code is far from polished! The

code consists of the following components:

- A command-line tool (

s2e_kaitai_cmd) that executes in the guest OS. This tool reads the input file and invokes an S2E plugin to selectively make the file symbolic; - An S2E plugin (

KaitaiStruct) that invokes Lua code to run a parser generated byksc; and - A small amount of Lua glue between the S2E configuration file and the parser

generated by

ksc.

Each of these components are described below. The complete code is available here.

The s2e_kaitai_cmd tool

At the start of this post I mentioned that we would normally use

s2ecmd symbfile to make an input file symbolic. The symbfile command makes

an input file symbolic by:

- Opening the input file in read/write mode

- Reading the input file into a buffer

- Calling

s2e_make_symbolicon the buffer - Writing the (now symbolic) buffer back to the original input file

We’ll take a similar approach, except we’ll modify step (3) to:

- Invoke the

KaitaiStructplugin to selectively make the buffer symbolic

To do this we’ll add the following directories/files to our S2E environment:

source/s2e/guest/common/s2e_kaitai_cmd/s2e_kaitai_cmd.csource/s2e/guest/common/include/s2e/kaitai/commands.h

I’ll skip steps 1, 2 and 4 because they have already been implemented in

s2ecmd.

For step 3, we’ll create a custom S2E command to invoke a plugin (described

later) that will selectively make the input file symbolic. The command

structure should be placed in

source/s2e/guest/common/include/s2e/kaitai/commands.h. It follows the

standard method for

invoking

S2E

plugins

from the guest:

enum S2E_KAITAI_COMMANDS {

KAITAI_MAKE_SYMBOLIC,

};

struct S2E_KAITAI_COMMAND_MAKE_SYMBOLIC {

// Pointer to guest memory where the symbolic file has been loaded

uint64_t InputFile;

// Size of the input file (in bytes)

uint64_t FileSize;

// 1 on success, 0 on failure

uint64_t Result;

} __attribute__((packed));

struct S2E_KAITAI_COMMAND {

enum S2E_KAITAI_COMMANDS Command;

union {

struct S2E_KAITAI_COMMAND_MAKE_SYMBOLIC MakeSymbolic;

};

} __attribute__((packed));

We can then add the following function to s2e_kaitai_cmd.c. This function

takes a pointer to the file contents (which have been read into a buffer) and

the size of the buffer (determined with lseek), constructs the relevant

command and sends this command to S2E.

static inline int s2e_kaitai_make_symbolic(const uint8_t *buffer, unsigned size) {

struct S2E_KAITAI_COMMAND cmd = {0};

cmd.Command = S2E_KAITAI_MAKE_SYMBOLIC;

cmd.MakeSymbolic.InputFile = (uintptr_t) buffer;

cmd.MakeSymbolic.FileSize = size;

cmd.MakeSymbolic.Result = 0;

s2e_invoke_plugin("KaitaiStruct", &cmd, sizeof(cmd));

return (int) cmd.MakeSymbolic.Result;

}

Now we need an S2E plugin to handle this command.

The KaitaiStruct plugin

Let’s start with a skeleton plugin (don’t forget to add

s2e/Plugins/KaitaiStruct.cpp to the add_library command in

source/s2e/libs2eplugins/src/CMakeLists.txt).

The header file:

#ifndef S2E_PLUGINS_KAITAI_STRUCT_H

#define S2E_PLUGINS_KAITAI_STRUCT_H

#include <s2e/CorePlugin.h>

#include <s2e/Plugins/Core/BaseInstructions.h>

// Forward declare the S2E command from s2e_kaitai_cmd

struct S2E_KAITAI_COMMAND;

namespace s2e {

namespace plugins {

// In addition to extending the basic Plugin class, we must also implement the

// IPluginInvoker to handle custom S2E commands

class KaitaiStruct : public Plugin, public IPluginInvoker {

S2E_PLUGIN

public:

KaitaiStruct(S2E *s2e) : Plugin(s2e) { }

void initialize();

// The method from IPluginInvoker that we must implement to respond to a

// custom command. This method takes the current S2E state, a pointer to

// the custom command object and the size of the custom command object

virtual void handleOpcodeInvocation(S2EExecutionState *state,

uint64_t guestDataPtr,

uint64_t guestDataSize);

private:

// The name of the Lua function that will run the Kaitai Struct parser

std::string m_kaitaiParserFunc;

// handleOpcodeInvocation will call this method to actually invoke the Lua function

bool handleMakeSymbolic(S2EExecutionState *state, const S2E_KAITAI_COMMAND &command);

}

} // namespace plugins

} // namespace s2e

#endif

And the CPP file:

// From source/s2e/guest/common/include

#include <s2e/kaitai/commands.h>

#include <s2e/ConfigFile.h>

#include <s2e/S2E.h>

#include <s2e/Utils.h>

#include "KaitaiStruct.h"

namespace s2e {

namespace plugins {

S2E_DEFINE_PLUGIN(

KaitaiStruct,

"Combine S2E and Kaitai Struct",

"",

// Dependencies

"LuaBindings"); // Reuse the existing Lua binding code from the

// function/instruction annotation plugins

void KaitaiStruct::initialize() {

m_kaitaiParserFunc = s2e()->getConfig()->getString(

getConfigKey() + ".parser");

}

bool KaitaiStruct::handleMakeSymbolic(S2EExecutionState *state,

const S2E_KAITAI_COMMAND &command) {

// We'll finish this later

return true;

}

void KaitaiStruct::handleOpcodeInvocation(S2EExecutionState *state,

uint64_t guestDataPtr,

uint64_t guestDataSize) {

S2E_KAITAI_COMMAND cmd;

// 1. Validate the received command

if (guestDataSize != sizeof(cmd)) {

getWarningsStream(state) << "S2E_KAITAI_COMMAND: Mismatched command "

<< "structure size " << guestDataSize << "\n";

exit(1);

}

// 2. Read the command

if (!state->mem()->read(guestDataPtr, &cmd, guestDataSize)) {

getWarningsStream(state) << "S2E_KAITAI_COMMAND: Failed to read "

<< "command\n";

exit(1);

}

// 3. Handle the command

switch (cmd.Command) {

case KAITAI_MAKE_SYMBOLIC: {

bool success = handleMakeSymbolic(state, cmd);

cmd.MakeSymbolic.Result = success ? 0 : 1;

// Write the result back to the guest

if (!state->mem()->write(guestDataPtr, cmd, guestDataSize)) {

getWarningsStream(State) << "S2E_KAITAI_COMMAND: Failed to "

<< " write result to guest\n";

exit(1);

}

} break;

default: {

getWarningsStream(state) << "S2E_KAITAI_COMMAND: Invalid command "

<< hexval(cmd.Command) << "\n";

exit(1);

}

}

}

} // namespace plugins

} // namespace s2e

Our plugin has only one dependency: the LuaBindings plugin. This plugin

configures S2E’s Lua interpreter and allows us to call Lua code in the S2E

configuration file.

The handleOpcodeInvocation method follows a similar pattern to other plugins

that implement IPluginInvoker (e.g.

FunctionModels

and LinuxMonitor):

- Validate the received command by checking its size.

- Read the command. Since the command is issued by the guest, it resides in guest memory. Nothing in our command should be symbolic (remember it only contains the input file’s start address and size), so we can read this memory concretely.

- Handle the command. In this case we call another function (that we’ll discuss shortly) to invoke the Lua interpreter to parse the input file.

- Indicate success/failure to the guest. We do this by setting a “return code” in the command structure and writing the command back into guest memory.

Let’s finish handleMakeSymbolic. Since we are working with Lua code we must

include some extra header files:

#include <vector>

#include <s2e/Plugins/Lua/Lua.h>

#include <s2e/Plugins/Lua/LuaS2EExecutionState.h>

And finally the function implementation:

bool KaitaiStruct::handleMakeSymbolic(S2EExecutionState *state,

const S2E_KAITAI_COMMAND &command) {

uint64_t addr = command.MakeSymbolic.InputFile;

uint64_t size = command.MakeSymbolic.FileSize;

std::vector<uint8_t> data(size);

// Read the input file's contents from guest memory

if (!state->mem()->read(addr, data.data(), sizeof(uint8_t) * size)) {

return false;

}

// Get the Lua interpreter's state

lua_State *L = s2e()->getConfig()->getState();

// Wrap the current S2E execution state

LuaS2EExecutionState luaS2EState(state);

// Turn the input file into a Lua string

luaL_Buffer luaBuff;

luaL_buffinit(L, &luaBuff);

luaL_addlstring(&luaBuff, (char*) data.data(), sizeof(uint8_t) * size);

// Set up our function call on Lua's virtual stack

lua_getglobal(L, m_kaitaiParserFunc.c_str());

Lunar<LuaS2EExecutionState>::push(L, &luaS2EState);

lua_pushinteger(L, addr);

luaL_pushresult(&luaBuff);

// Call our Kaitai Struct parser function

lua_call(L, 3, 0);

return true;

}

Hopefully this is relatively easy to understand (see here for more information on Lua’s C API). First we read the input file into a Lua string for the Kaitai Struct parser. Then, we call the Kaitai Struct parser function (which we’ll define in the following section).

We must set up the parser function’s arguments before we can call it. A virtual

stack is used to pass values to Lua functions. The first item pushed onto this

stack is always the function name. Our parser function will be defined in Lua’s

global namespace (for simplicity), so we can use lua_getglobal to retrieve

this function from the S2E configuration file and push it onto the stack. We

then push:

- The current S2E execution state;

- The input file’s start address (in guest memory); and

- The contents of the input file (as a string).

Now all that’s left to do is to implement this parser in the S2E configuration file.

Lua scripts

First, we need to compile a Kaitai Struct format definition into a Lua parser. Since we are going to be experimenting with readelf, let’s create our readelf project now and get the ELF definition from the Kaitai Struct gallery:

# Create the S2E project

s2e new_project -n readelf_kaitai readelf -h @@

cd projects/readelf_kaitai

# Get the ELF Kaitai Struct definition and compile it

wget https://raw.githubusercontent.com/kaitai-io/kaitai_struct_formats/master/executable/elf.ksy

ksc -t lua elf.ksy

This will generate elf.lua. Now let’s try it out on a testcase from

AFL. If you don’t already have it, you’ll

also need Kaitai Struct’s Lua

runtime:

# Get Kaitai Struct's Lua runtime

git clone https://github.com/kaitai-io/kaitai_struct_lua_runtime lua_runtime

# Get the ELF testcase

wget https://raw.githubusercontent.com/mirrorer/afl/master/testcases/others/elf/small_exec.elf

# Parse the testcase

lua5.3 - << EOF

package.path = package.path .. ";./lua_runtime/?.lua"

require("elf")

inp = assert(io.open("small_exec.elf", "rb"))

testcase = Elf(KaitaiStream(inp))

print("testcase e_ehsize: " .. testcase.header.e_ehsize)

EOF

You should see a header size of 52 bytes (you can run

readelf -h small_exec.elf to confirm).

I originally stated that we’d be using Kaitai Struct’s processing spec to

target specific file attributes to make symbolic. Let’s define this processing

spec in lua_runtime/s2e_make_symbolic.lua:

local class = require("class")

S2eMakeSymbolic = class.class()

function S2eMakeSymbolic:_init(s2e_state, start_addr, curr_pos, name)

self._state = s2e_state

self._addr = start_addr + curr_pos

self._name = name

end

function S2eMakeSymbolic:decode(data)

local mem = self._state:mem()

local size = data:len()

-- The decode routine is called after the data has already been read, so we

-- must return to the start of the data in order to make it symbolic

local addr = self._addr - size

mem:makeConcolic(addr, size, self._name)

-- Return the data unchanged

return data

end

All we’ve done here is define a new class, S2eMakeSymbolic, with a

constructor (_init) and a decode method. The constructor takes the

following arguments:

- The current S2E execution state;

- The input file’s start address (in guest memory);

- The current position of the parser. This plus the start address tell us the absolute memory address to make symbolic; and

- The name of the symbolic variable.

The ELF parser will automatically call decode when it encounters an attribute

with the s2e_make_symbolic processing spec applied. However, the decode

method is called after the data has been read from the input file, so we must

compensate for this when we make the data symbolic (by subtracting the size

of the memory region we just read).

Let’s make something symbolic. We’ll choose something simple for now — the ELF

header’s e_machine field. In elf.ksy the e_machine field is defined under

the endian_elf type:

# The original definition of the e_machine field

- id: machine

type: u2

enum: machine

Processing specs can only be applied to byte arrays, so we must replace the

type specification with a byte array’s size specification. Because the

original type was an unsigned 2-byte value, we can simply treat the machine

as a byte array of size 2. We must also remove the enum mapping, otherwise

ksc will raise a compilation error when it tries to apply an enum to a byte

array.

# Redefinition of the e_machine field to make it symbolic

- id: machine

size: 2

process: s2e_make_symbolic(s2e_state, start_addr, _io.pos, "machine")

Finally, we must propagate two additional arguments — the S2E execution state

and the input file’s start address — from the parser’s constructor to

s2e_make_symbolic. We do this with the “params spec”. The machine attribute

is nested under the endian_elf and top-level elf types, so the following

params spec must be defined under both.

params:

- id: s2e_state

- id: start_addr

We must also modify the header’s type from endian_elf to

endian_elf(s2e_state, start_addr). This ensures that the two arguments are

passed to endian_elf’s constructor. (If this is all getting a bit confusing,

remember that the full source code is available

here).

# The original header's type

- id: header

type: endian_elf

# Redefined to propagate the S2E execution state and input file's start address

# to the endian_elf type

- id: header

type: endian_elf(s2e_state, start_addr)

Now recompile elf.ksy. If you open elf.lua you should see that the

constructor (Elf:_init) now takes s2e_state and start_addr as its first

two arguments. These arguments are saved and later propagated to the

S2eMakeSymbolic constructor via the Elf.EndianElf constructor.

All that’s left to do is write a small function in our S2E configuration file

that will instantiate and run our parser. This function is invoked by the

handleMakeSymbolic method in the KaitaiStruct plugin.

package.path = package.path .. ";./lua_runtime/?.lua"

local stringstream = require("string_stream")

require("elf")

function make_symbolic_elf(state, start_addr, buffer)

local ss = stringstream(buffer)

-- This will kick-start the parser. We don't care about the final result

Elf(state, start_addr, KaitaiStream(ss))

end

-- Enable and configure the necessary plugins

add_plugin("LuaBindings")

add_plugin("KaitaiStruct")

pluginsConfig.KaitaiStruct = {

parser = "make_symbolic_elf",

}

And we’re done!

Experimenting with readelf

We’re finally ready to experiment with readelf. Before we do, modify the S2E configuration file so that only the following plugins are enabled:

- BaseInstructions

- HostFiles

- Vmi

- TranslationBlockCoverage

- ModuleExecutionDetector

- ForkLimiter

- ProcessExecutionDetector

- LinuxMonitor

We’ll also have to modify bootstrap.sh. Under ${S2EGET} "readelf" add

${S2EGET} "small_exec.elf" to copy our testcase to the guest. In the

prepare_inputs function replace truncate -s 256 ${SYMB_FILE} with

cp small_exec.elf ${SYMB_FILE} to use our testcase. We won’t replace the

symbfile command yet; let’s get an initial idea of how readelf performs on a

fully symbolic file.

Run S2E for a minute or so before killing it. You should see many forked states (I forked 136 states). Let’s generate code coverage information:

# The actual disassembler isn't important

s2e coverage basic_block --disassembler=binaryninja readelf_kaitai

Where are these forks occurring? Many are in libc, due to readelf calling

printf on symbolic data. What about the forks that occur in readelf? The

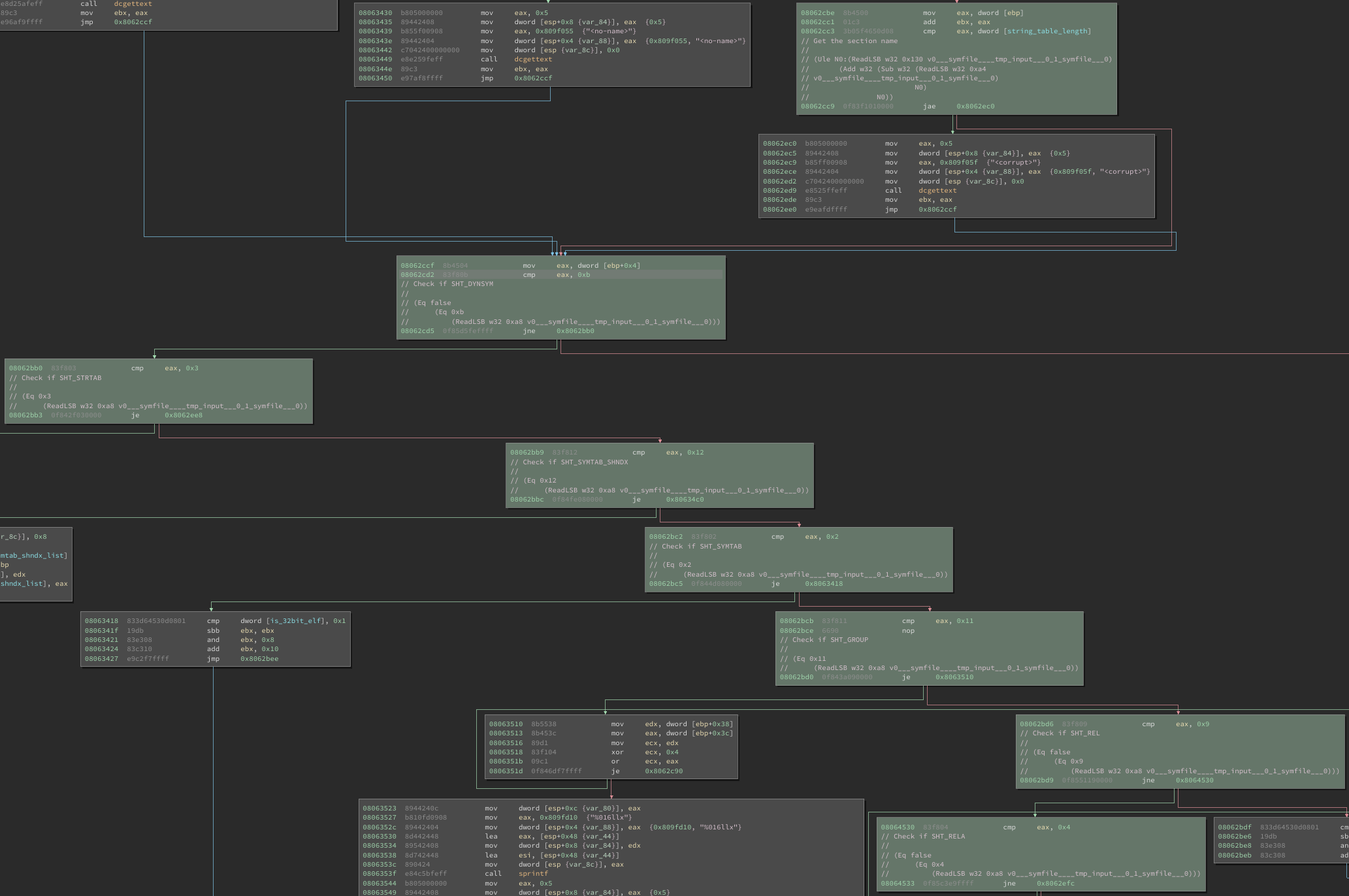

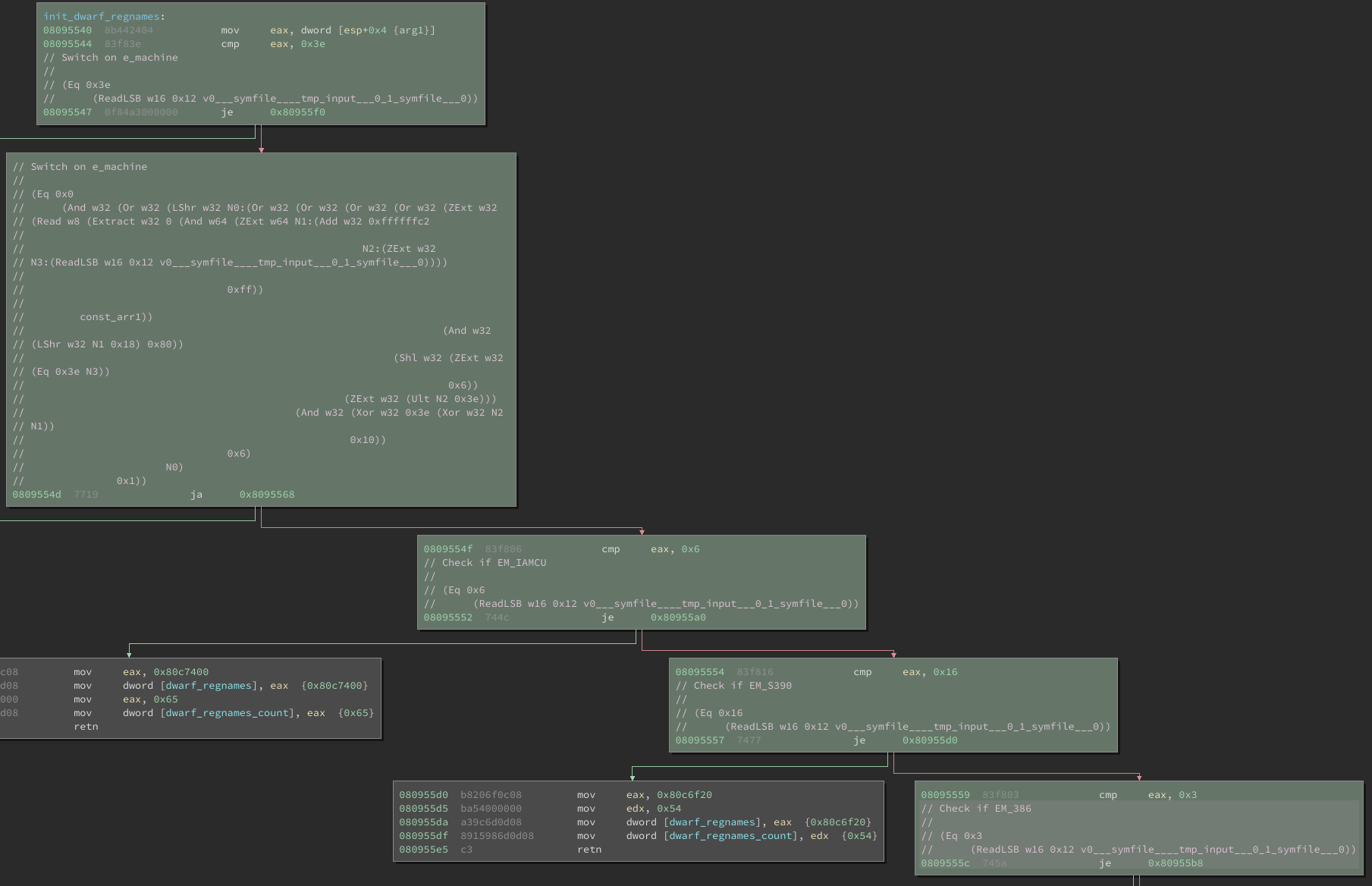

following images show snippets of two functions from readelf:

process_section_headers and init_dwarf_regnames. Green basic blocks

indicate blocks that were executed by S2E. Fork points have also been annotated

with their constraints (in KLEE’s

KQuery format):

readelf’s process_section_headers code coverage

readelf’s init_dwarf_regnames code coverage

Forking also occurs when checking:

- If the input file is an archive

- The data encoding (little endian or big endian)

- The section header table’s file offset

- If each section’s

sh_linkandsh_infovalues are valid

And at many other locations! Now let’s try and reduce forking to only those

program paths that relate to the ELF header’s e_machine field. Edit

bootstrap.sh and replace ${S2ECMD} symbfile ${SYMB_FILE} with

./s2e_kaitai_cmd ${SYMB_FILE}. Now rerun S2E for a minute. During my run,

forking was limited to the get_machine_name and init_dwarf_regnames

functions, both of which have switch statements based on the value of

e_machine. Success!

Let’s try and target a different field in the ELF file — the section header’s

sh_type. Unlike the e_machine field, which only occurs once in the ELF

file, the sh_type can occur multiple times throughout the file (depending on

the number of sections in the ELF file).

Once again, we must propagate the S2E execution state and input file’s start

address to the appropriate attribute in the ELF declaration. This time we must

add the params spec to the section_header type. The type attribute is

defined as an unsigned 4-byte enum, so we must change this to a 4 byte array so

that we can apply s2e_make_symbolic:

# Elf(32|64)_Shdr

section_header:

params:

- id: s2e_state

- id: start_addr

seq:

# sh_name

- id: name_offset

type: u4

# sh_type

- id: type

size: 4

process: s2e_make_symbolic(s2e_state, start_addr, _io.pos, "sh_type")

# ...

We must also ensure that these two arguments are passed to the

SectionHeader’s constructor. The section header can be found under the

section_headers instance:

# The original section_headers

section_headers:

pos: section_header_offset

repeat: expr

repeat-expr: qty_section_header

size: section_header_entry_size

type: section_header

# Redefined for symbolic execution

section_headers:

pos: section_header_offset

repeat: expr

repeat-expr: qty_section_header

size: section_header_entry_size

type: section_header(s2e_state, start_addr)

Notice that section_headers is declared as an

“instance spec”. This

means that section_headers compiles to a function that will only parse the

section header on demand. Therefore we must access the section_headers to

force them to be parsed. To do this we must modify the make_elf_symbolic

function in s2e-config.lua:

function make_symbolic_elf(state, start_addr, buffer)

-- ...

-- This will kick-start the parser. However, now we do care about the final

-- result, because we must access the section headers to force them to be

-- parsed

local elf_file = Elf(state, start_addr, KaitaiStream(ss))

-- This will kick-start the section header parser

_ = elf_file.header.section_headers

end

Run ksc to regenerate elf.lua. Before we rerun S2E, let’s take a look at

elf.lua. In particular, the parsing of the section headers in the

section_headers’ get method:

function Elf.EndianElf.property.section_headers:get()

-- ...

for i = 1, self.qty_section_header do

self._raw__m_section_headers[i] = \

self._io:read_bytes(self.section_header_entry_size)

local _io = KaitaiStream(stringstream(self._raw__m_section_headers[i]))

self._m_section_headers[i] = Elf.EndianElf.SectionHeader(self.s2e_state,

self.start_addr,

_io, self,

self._root,

self._is_le)

end

-- ...

end

Notice that ksc creates a local variable, _io, that gets passed to the

SectionHeader constructor. This _io variable contains the raw data that

will eventually be transformed into a SectionHeader object. Unfortunately,

this causes a problem for the s2e_make_symbolic processing spec.

Recall that the parser’s current position (_io.pos) is passed to the

s2e_make_symbolic processing spec. Unfortunately, when the local _io stream

is created, this position is reset to zero, so using this position would result

in an incorrect memory address being made symbolic. Fortunately, we can fix

this with a small change to the Lua code:

for i = 1, self.qty_section_header do

-- Get the absolute start address of the section header before it is parsed

local _sec_hdr_start_addr = self.start_addr + self._io:pos()

self._raw__m_section_headers[i] = \

self._io:read_bytes(self.section_header_entry_size)

local _io = KaitaiStream(stringstream(self._raw__m_section_headers[i]))

-- Use the section header's start address instead of the ELF's start address

self._m_section_headers[i] = Elf.EndianElf.SectionHeader(self.s2e_state,

_sec_hdr_start_addr,

_io, self,

self._root,

self._is_le)

end

Yes, hacking the generated Lua code is kind of disgusting. However, it ensures

that the correct memory address is made symbolic. When I reran S2E, forking was

limited to sh_type comparisons in the process_section_headers function.

Success again!

Conclusions and future work

In this post I’ve looked at how we can perform more targeted symbolic execution of file parsers. Rather than giving the parser a fully-symbolic input file (which quickly leads to path explosion), we can use Kaitai Struct to target specific parts of the input file to make symbolic. While this approach seems to work, there are a few problems associated with it.

First, it relies on the user having a valid seed file to perform symbolic

execution with. This seed file must also contain data for the part of the

parser that we wish to exercise. For example, let’s assume that we wanted to

apply this technique to a PNG parser. If we took

this definition of a PNG file and

wanted to see what happened when the bkgd_truecolor attribute was made

symbolic, our PNG seed would also have to contain a background color

chunk.

Otherwise our parser would have nothing to make symbolic.

For similar reasons, we cannot just use the “empty” symbolic file that the S2E

bootstrap script creates. Why? Because when the Kaitai Struct parser executes,

it runs on concrete data contained in the file. The default symbolic file that

S2E creates is filled with NULL characters, so the parser would fail

instantly. Wouldn’t it be cool if we could pull files out of thin

air?

Other issues stem from how we are using Kaitai Struct. This is not the fault of

Kaitai Struct; in fact the Kaitai Struct

FAQ explicitly states that the generated

parsers are not designed for this model of “event-based” parsing. We could have

modified ksc to generate code that required fewer manual modifications (e.g.

automatically generate the params spec, use non-lazy instance specs, always

keep track of the parser’s absolute position, etc.), however for simplicity I

wanted to leave Kaitai Struct “as is”.

What about non file-based symbolic execution? For example, in my previous post I showed how to use S2E to solve a CTF

challenge that used a command-line string as input. The approach described in

this post would not help in solving this CTF challenge. However, there is no

reason why the KaitaiStruct plugin could not be extended to work on

command-line strings. For example, we could define the CTF challenge’s input

string in Kaitai Struct as follows:

meta:

id: ctf-input

title: Google CTF input format

ks-version: 0.8

seq:

- id: prefix

size: 4

contents: "CTF{"

- id: to_solve

size: 63 # total length of 67 bytes minus the 4 byte prefix

process: s2e_make_symbolic(s2e_state, start_addr, _io.pos, "to_solve")

params:

- id: s2e_state

- id: start_addr

With some additional code we could run this parser on the input string to make

only the last 63 bytes symbolic. This would allow us to remove the

onSymbolicVariableCreation method from the S2E

plugin.

Despite these problems, combining S2E and Kaitai Struct seemed to work fairly well for the work that I was doing (although your mileage may vary!). We could probably get around these problems with a bit more work (and a lot more code). However, I think I’ll save that for a future post :)